AI in Video Verification: Deepfake Detection, Fraud Prevention, and Assisted Workflows

Digital onboarding, remote account servicing, and high-value customer journeys increasingly rely on video. At the same time, generative AI has made impersonation cheaper, faster, and more convincing. Gartner has warned that by 2026, deepfake attacks on face biometrics will lead 30% of enterprises to stop considering identity verification and authentication solutions reliable in isolation. That is a clear signal that “single-control” stacks will not hold up.

This blog breaks down what modern video fraud looks like, what “deepfake detection” really means in practice, and how regulated institutions can design assisted workflows that combine AI signals with human decisioning to reduce fraud without destroying user experience.

1) What changed: deepfakes moved from novelty to operational fraud

Deepfakes are no longer limited to pre-recorded clips. Real-time and near-real-time manipulation is now used in fraud and social engineering, including multi-person video calls designed to pressure staff into making transfers.

A widely reported example: a deepfake-driven video call scam in Hong Kong led to the transfer of HK$200 million (about £20 million), according to a report citing Hong Kong police. The engineering firm Arup later confirmed it was the victim, and the World Economic Forum highlighted the case, describing how a staff member was deceived during a video call and transferred $25 million.

Separately, Europol has repeatedly warned that AI-powered voice cloning and live deepfakes are amplifying organized crime capabilities, enabling new forms of fraud and extortion.

What this means for banks and regulated businesses: video as a channel is not “unsafe,” but controls must evolve from simple visual checks to multi-signal verification and step-up decision-making.

2) Threat model: the main fraud paths in video verification

Here is a practical taxonomy you can use when designing controls.

A) Presentation attacks (classic spoofing)

Examples: printed photo, replayed video on another screen, mask attacks, or simple “camera at a screen” replays.

This category is precisely what Presentation Attack Detection (PAD) aims to stop. NIST has published a major evaluation report that quantified the performance of passive, software-based PAD algorithms across many submissions, helping institutions understand how different approaches behave and how error rates vary by attack instrument type.

Takeaway: PAD is necessary, but not sufficient once attackers start using synthetic media and injection techniques.

B) Deepfake and synthetic media

Examples: face swap, reenactment, lip sync, voice cloning paired with video, or fully synthetic video.

This is where “looks real” becomes a liability. Deepfakes make human visual judgment unreliable, and they can exploit trust, urgency, and authority cues during live interactions.

C) Injection attacks (the remote verification killer)

Injection means the attacker does not show a spoof “to the camera.” Instead, they feed manipulated content directly into the software pipeline using virtual cameras, process hooking, or man-in-the-middle techniques.

NIST has discussed injection attack patterns in the context of biometric systems, including examples like face reenactment, face swap, morphed images, and synthetic sources.

Takeaway: if your liveness relies only on “what the camera sees,” injection is the bypass you must assume attackers will attempt.

D) Social engineering wrapped around video

Even with good AI detection, scammers often win by manipulating people and processes: urgent approvals, “CEO-style” pressure, exception handling, or targeting new staff.

UK Finance reporting shows that impersonation scams can be high-value per case, which is one reason attackers favor them.

3) Deepfake detection: what it is (and what it is not)

Deepfake detection is not a single feature. In robust systems it is a layered set of tests, each aimed at a different failure mode.

Layer 1: Media integrity and capture integrity

Goal: ensure the video stream is authentic and captured from a real camera path.

Signals:

- detection of virtual camera usage

- codec and metadata anomalies

- device attestation signals (where available)

- sensor and motion consistency checks

- capture SDK controls that reduce tampering surface

Why it matters: injection attacks can defeat purely visual liveness if the source is synthetic but “clean.”
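As an illustration of how some Layer 1 signals could be turned into reason codes, here is a minimal sketch. Everything in it is hypothetical: the `CaptureInfo` fields, the device-name denylist, and the frame-timing heuristic are assumptions for illustration, not any vendor's API. Device labels are trivially renamed by attackers, so a real capture SDK would lean on attestation and deeper pipeline checks rather than names alone.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical, illustrative denylist; real products maintain larger, regularly
# updated lists and never rely on device labels alone.
VIRTUAL_CAMERA_HINTS = ("obs virtual", "manycam", "virtual cam", "snap camera", "droidcam")


@dataclass
class CaptureInfo:
    device_label: str                # camera label reported by the capture client
    codec: str                       # codec declared for the stream
    expected_codecs: tuple           # codecs the capture SDK is configured to emit
    frame_intervals_ms: list         # time between consecutive frames
    attestation_ok: Optional[bool]   # None when device attestation is unavailable


def capture_integrity_flags(info: CaptureInfo) -> list[str]:
    """Return reason codes for capture-integrity concerns (empty list = none found)."""
    flags = []
    label = info.device_label.lower()
    if any(hint in label for hint in VIRTUAL_CAMERA_HINTS):
        flags.append("VIRTUAL_CAMERA_LABEL")
    if info.codec not in info.expected_codecs:
        flags.append("UNEXPECTED_CODEC")
    # Heuristic only: physical sensors usually show some timing jitter, so
    # perfectly uniform intervals over many frames may hint at software-generated frames.
    if len(info.frame_intervals_ms) > 10 and len(set(info.frame_intervals_ms)) == 1:
        flags.append("SYNTHETIC_FRAME_TIMING")
    if info.attestation_ok is False:
        flags.append("ATTESTATION_FAILED")
    return flags
```

Returning reason codes rather than a single boolean keeps the output usable both for risk scoring and for the audit trail.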

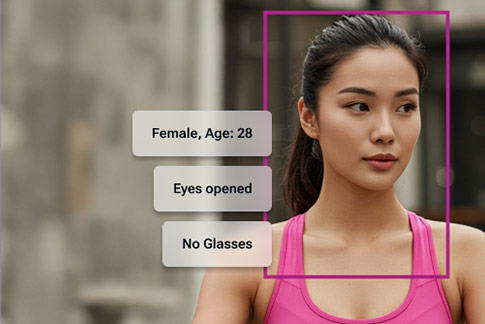

Layer 2: Face and motion based liveness

Goal: detect whether a real human is present.

Approaches:

- passive liveness (texture, frequency artifacts, reflectance cues)

- active liveness (prompted actions, randomized challenges)

- hybrid approaches
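Active liveness relies on prompts the attacker cannot predict. A minimal sketch of issuing and checking a randomized challenge follows; the prompt names and the 10-second limit are hypothetical, and the step that detects which actions the user actually performed is assumed to exist upstream. Randomization defeats pre-recorded replays, but a real-time reenactment tool can follow prompts, so this complements rather than replaces integrity and deepfake checks.

```python
import secrets

# Hypothetical prompt vocabulary; real liveness SDKs define their own.
CHALLENGES = ("turn_left", "turn_right", "look_up", "blink", "smile", "open_mouth")


def issue_challenge(n: int = 3) -> list[str]:
    """Draw n distinct prompts in an unpredictable order using a CSPRNG."""
    if not 1 <= n <= len(CHALLENGES):
        raise ValueError("n must be between 1 and the number of available prompts")
    pool = list(CHALLENGES)
    # secrets.randbelow avoids the predictable default generator in `random`.
    return [pool.pop(secrets.randbelow(len(pool))) for _ in range(n)]


def verify_response(issued: list[str], observed: list[str],
                    elapsed_s: float, max_seconds: float = 10.0) -> bool:
    """Pass only if the detected actions match the issued prompts, in order,
    within the time limit (a tight limit makes offline re-rendering harder)."""
    return observed == issued and elapsed_s <= max_seconds
```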

Independent evaluations like NIST’s work on PAD are useful reference points when selecting vendors or benchmarking internal models.

Layer 3: Deepfake specific artifacts and consistency checks

Goal: detect manipulation even when a real person is present.

Signals commonly used:

- temporal inconsistencies in skin texture, edges, and lighting

- physiological signals (micro movements, pulse related cues) where feasible

- audio visual mismatch and lip sync anomalies

- face geometry drift across frames

- GAN-fingerprinting-style cues (these change as generation techniques evolve)

Layer 4: Identity and risk context

Goal: stop fraud that “passes liveness” but fails trust.

Signals:

- document verification results and document integrity checks

- device fingerprint and behavioral risk

- velocity, geo anomalies, session reputation

- known compromised identity signals
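Velocity is one of the simpler Layer 4 signals to compute. A minimal sketch, assuming a hypothetical in-memory tracker keyed by device fingerprint with timestamps in seconds arriving in non-decreasing order; a production system would use a shared store and tune the window and limit to its own traffic.

```python
from collections import defaultdict, deque


class VelocityTracker:
    """Hypothetical helper: counts recent verification attempts per key
    (e.g. device fingerprint) within a sliding time window."""

    def __init__(self, window_s: float = 3600.0, limit: int = 5):
        self.window_s = window_s
        self.limit = limit
        self._events = defaultdict(deque)  # key -> timestamps, oldest first

    def record(self, key: str, ts: float) -> bool:
        """Record an attempt at time ts; return True if the key now exceeds the limit."""
        q = self._events[key]
        q.append(ts)
        # Drop attempts that have aged out of the window.
        while q and q[0] <= ts - self.window_s:
            q.popleft()
        return len(q) > self.limit
```

The same pattern applies to other keys, such as a claimed identity or document number being attempted from many devices.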

4) Fraud prevention strategy: move from single checks to adaptive journeys

If deepfakes can beat one control, the winning strategy is to make fraudsters beat many different controls, and to raise friction only when risk warrants it.
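As a rough worked illustration, assume each control $i$ is bypassed with probability $p_i$ and, optimistically, that bypasses are independent. The chance of beating all $k$ controls is then the product:

```latex
P(\text{bypass all } k \text{ controls}) = \prod_{i=1}^{k} p_i,
\qquad \text{e.g. } 0.05 \times 0.10 \times 0.20 = 0.001
```

In practice, controls are correlated: an injected stream that defeats passive liveness may also defeat a deepfake model analysing the same frames, so the real figure is higher than the product. This is one reason capture integrity (Layer 1) and identity and risk context (Layer 4) add more than another check on the same pixels.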

A simple adaptive decision model (usable in real deployments)

Step 1: Baseline checks (low friction)

- passive liveness

- media integrity checks

- face match confidence above threshold

- basic document checks (if onboarding)
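The baseline step can be expressed as a small gate that reports which checks failed. This sketch uses hypothetical thresholds and field names; real values would come from vendor calibration and the institution's own false-accept and false-reject targets.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds for illustration only.
MIN_PASSIVE_LIVENESS = 0.80
MIN_FACE_MATCH = 0.90


@dataclass
class BaselineResult:
    passive_liveness: float       # 0..1, higher = more likely a live person
    integrity_flags: list         # reason codes from media-integrity checks
    face_match: float             # 0..1 similarity to the reference image
    document_ok: Optional[bool]   # None when the session is not onboarding


def baseline_failures(r: BaselineResult) -> list[str]:
    """Return the baseline checks that failed; an empty list means all passed."""
    failures = []
    if r.passive_liveness < MIN_PASSIVE_LIVENESS:
        failures.append("LOW_PASSIVE_LIVENESS")
    if r.integrity_flags:
        failures.append("MEDIA_INTEGRITY")
    if r.face_match < MIN_FACE_MATCH:
        failures.append("LOW_FACE_MATCH")
    if r.document_ok is False:
        failures.append("DOCUMENT_CHECK")
    return failures
```

Returning the failed checks, rather than rejecting outright, lets them feed the risk score in the next step.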

Step 2: Risk scoring

Assign a session risk score using:

- liveness confidence

- injection suspicion

- device reputation

- behavioral signals

- history and velocity
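One simple way to combine these signals is a weighted average of per-signal risks. The sketch below assumes each signal has already been normalized to 0..1 (higher = riskier); the weights and signal names are hypothetical and would, in practice, be fitted on labeled outcomes and revalidated over time.

```python
# Hypothetical weights for illustration; not calibrated values.
WEIGHTS = {
    "liveness_risk": 0.30,        # e.g. 1 - liveness confidence
    "injection_suspicion": 0.30,
    "device_risk": 0.15,          # poor or unknown device reputation
    "behavior_risk": 0.15,
    "velocity_risk": 0.10,        # history and velocity anomalies
}


def session_risk(signals: dict[str, float]) -> float:
    """Weighted average of per-signal risks in [0, 1]. Missing signals count as
    maximally risky, so absent telemetry can never lower the score."""
    total = 0.0
    for name, weight in WEIGHTS.items():
        value = signals.get(name, 1.0)
        total += weight * min(max(value, 0.0), 1.0)  # clamp to [0, 1]
    return total / sum(WEIGHTS.values())
```

Treating missing signals as high risk is a fail-closed choice: an attacker who suppresses device telemetry gets more friction, not less.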

Step 3: Step up only when needed

If risk is medium to high:

- active liveness challenge with randomized prompts

- secondary factor verification

- knowledge based or possession based confirmation (policy dependent)

- human review or live agent assisted verification

This is where assisted workflows matter most.
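A minimal sketch of turning a risk score into a band and a step-up plan. The cut-offs, measure names, and the rule that high risk always adds live agent review are hypothetical policy choices, not a prescribed standard.

```python
# Hypothetical band cut-offs; each institution sets these from its risk appetite
# and observed score distributions.
MEDIUM_AT = 0.35
HIGH_AT = 0.65


def risk_band(score: float) -> str:
    if score >= HIGH_AT:
        return "high"
    if score >= MEDIUM_AT:
        return "medium"
    return "low"


def step_up_plan(score: float, possession_factor_enrolled: bool) -> list[str]:
    """Choose step-up measures for a session (illustrative policy only)."""
    band = risk_band(score)
    if band == "low":
        return []
    plan = ["active_liveness_randomized"]
    if possession_factor_enrolled:
        plan.append("possession_factor")  # e.g. a one-time code to a registered device
    if band == "high":
        plan.append("live_agent_review")
    return plan
```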

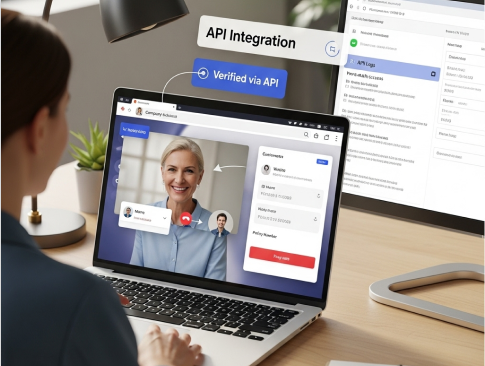

5) Assisted workflows: the human-plus-AI model that actually scales

Assisted workflows are not “manual review for everything.” They are structured escalation paths where AI does the heavy lifting and humans handle exceptions.

Where humans outperform AI

- interpreting edge cases where policy, intent, or context matters

- spotting social engineering patterns in conversation flow

- resolving mismatches with additional questioning and document checks

- applying regulatory or institution-specific rules

Where AI should lead

- continuous liveness and integrity scoring

- flagging anomalies in real time

- standardizing evidence capture and audit trails

- reducing false positives by using multi-signal correlation

A recommended assisted workflow for regulated onboarding

| Risk band | What the user experiences | What the bank does |
|---|---|---|
| Low | Smooth flow, passive checks | Auto-approve with audit logs |
| Medium | Short step-up, additional prompt | Auto-approve if the step-up passes, else queue for review |
| High | Routed to a live agent | Agent sees the AI reasons, requests extra evidence, records the decision |

This structure improves both security and customer experience because most genuine users stay in low-friction paths, while suspicious sessions get human attention.
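The routing in the table can be written as a small decision function. This is a minimal sketch with hypothetical outcome names; the risk band is assumed to be computed elsewhere, and every outcome would still be written to the audit log.

```python
from enum import Enum
from typing import Optional


class Outcome(Enum):
    AUTO_APPROVE = "auto_approve"
    STEP_UP = "step_up"
    QUEUE_REVIEW = "queue_review"
    LIVE_AGENT = "live_agent"


def route(band: str, step_up_passed: Optional[bool] = None) -> Outcome:
    """Map a risk band (and, for medium risk, the step-up result) to an outcome,
    following the risk band table."""
    if band == "low":
        return Outcome.AUTO_APPROVE
    if band == "medium":
        if step_up_passed is None:
            return Outcome.STEP_UP  # step-up not yet attempted
        return Outcome.AUTO_APPROVE if step_up_passed else Outcome.QUEUE_REVIEW
    if band == "high":
        return Outcome.LIVE_AGENT
    raise ValueError(f"unknown risk band: {band!r}")
```

Raising on an unknown band, instead of defaulting to approval, keeps configuration mistakes from silently opening a bypass.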

6) Controls that matter most against deepfake enabled fraud

Control 1: Make injection expensive

- prefer secure capture components where feasible

- detect virtual cameras and synthetic sources

- correlate device signals with stream characteristics

- monitor tampering indicators
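Correlating device signals with stream characteristics means checking that what the device claims is consistent with what the stream shows. A minimal sketch, assuming hypothetical `DeviceClaims` (for example from attestation or SDK-reported camera capabilities) and `StreamStats` (measured server-side). Legitimate pipelines can rescale video, so real rules need tolerances tuned per platform.

```python
from dataclasses import dataclass


@dataclass
class DeviceClaims:
    platform: str                 # e.g. "ios", "android", "web"
    supported_resolutions: set    # (width, height) pairs the camera reports
    max_fps: float


@dataclass
class StreamStats:
    width: int
    height: int
    observed_fps: float
    platform_hint: str            # platform inferred from stream and client signals


def correlation_flags(dev: DeviceClaims, stream: StreamStats,
                      fps_tolerance: float = 1.0) -> list[str]:
    """Return reason codes where the stream contradicts the device's claims."""
    flags = []
    dims = (stream.width, stream.height)
    # Accept either orientation, since portrait capture swaps width and height.
    if dims not in dev.supported_resolutions and dims[::-1] not in dev.supported_resolutions:
        flags.append("RESOLUTION_NOT_SUPPORTED_BY_DEVICE")
    if stream.observed_fps > dev.max_fps + fps_tolerance:
        flags.append("FPS_EXCEEDS_DEVICE_MAX")
    if stream.platform_hint != dev.platform:
        flags.append("PLATFORM_MISMATCH")
    return flags
```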

Control 2: Use PAD plus deepfake detection, not one or the other

PAD addresses classic spoofs. Deepfake detection addresses synthetic manipulation. You want both.

NIST’s PAD evaluation work can help anchor internal conversations around measurable performance and failure modes.

Control 3: Build step up paths for high value actions

Even if onboarding is strong, fraud often happens at:

- adding beneficiaries

- changing payout destinations

- resetting credentials

- unlocking accounts

- high value withdrawals

If you apply video verification to these events, make the step-up logic stricter than it is for onboarding.
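One way to encode "stricter than onboarding" is a per-event policy table of maximum risk scores allowed without a step-up. The event names mirror the list above, but the numbers and the fallback rule are hypothetical.

```python
# Hypothetical policy: the maximum session risk score (0..1) allowed to proceed
# without a step-up. Lower = stricter. Values are illustrative only.
ONBOARDING_MAX_RISK = 0.35
EVENT_MAX_RISK = {
    "add_beneficiary": 0.25,
    "change_payout_destination": 0.20,
    "reset_credentials": 0.20,
    "unlock_account": 0.25,
    "high_value_withdrawal": 0.15,
}

# Guard against misconfiguration: every sensitive event must be stricter than onboarding.
assert all(v < ONBOARDING_MAX_RISK for v in EVENT_MAX_RISK.values())


def needs_step_up(event: str, risk_score: float) -> bool:
    """Unknown events fall back to the strictest threshold, never the onboarding one."""
    limit = EVENT_MAX_RISK.get(event, min(EVENT_MAX_RISK.values()))
    return risk_score > limit
```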

Control 4: Design for auditability and explainability

Regulated environments need:

- clear reason codes for escalations

- evidence retention rules

- operator decision logs

- model monitoring, drift checks, and periodic revalidation
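A minimal sketch of an escalation record that captures reason codes, model version, the operator's decision, and a retention date. The field names and the default retention period are hypothetical; retention must follow the regulations that apply to the institution.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass
class EscalationRecord:
    session_id: str
    risk_score: float
    reason_codes: list            # machine-readable reasons shown to the operator
    model_version: str            # which model produced the scores (needed for revalidation)
    operator_id: str
    decision: str                 # e.g. "approved", "rejected", "more_evidence"
    decided_at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())
    retention_days: int = 1825    # hypothetical; set per applicable regulation

    def retain_until(self) -> str:
        decided = datetime.fromisoformat(self.decided_at)
        return (decided + timedelta(days=self.retention_days)).date().isoformat()

    def to_json(self) -> str:
        payload = asdict(self)
        payload["retain_until"] = self.retain_until()
        return json.dumps(payload, sort_keys=True)
```

Recording the model version alongside each decision makes it possible to re-examine past decisions when a model is later found to have drifted.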

7) Metrics that prove the system works (and keep improving)

Track these continuously:

- fraud capture rate by attack type

- false reject rate (good users blocked)

- average handling time for escalations

- step up completion rate

- injection suspicion rate and top indicators

- model drift indicators and new fraud patterns observed

Also track “process fraud,” meaning cases where policy exceptions or staff overrides were exploited.
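Several of these metrics fall out of labeled case outcomes. A minimal sketch, assuming each case is a dict with hypothetical keys `is_fraud`, `attack_type`, `blocked`, and an optional `staff_override`, with labels coming from confirmed investigations.

```python
from collections import Counter


def verification_metrics(cases: list[dict]) -> dict:
    """Fraud capture rate by attack type, false reject rate, and process-fraud count."""
    fraud_total, fraud_caught = Counter(), Counter()
    genuine_total = genuine_blocked = process_fraud = 0
    for c in cases:
        if c["is_fraud"]:
            fraud_total[c["attack_type"]] += 1
            fraud_caught[c["attack_type"]] += int(c["blocked"])
            # Process fraud: the attack got through via an exception or staff override.
            if c.get("staff_override") and not c["blocked"]:
                process_fraud += 1
        else:
            genuine_total += 1
            genuine_blocked += int(c["blocked"])
    return {
        "capture_rate_by_attack": {t: fraud_caught[t] / n for t, n in fraud_total.items()},
        "false_reject_rate": genuine_blocked / genuine_total if genuine_total else 0.0,
        "process_fraud_cases": process_fraud,
    }
```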

8) Designing AI-led video verification for real-world risk

As video becomes a core channel for onboarding and account servicing, it is important to frame video verification correctly.

Video verification is not simply video calling. In regulated environments, it is a controlled and auditable workflow designed to capture evidence, assess risk, and support compliance requirements. The objective is not visual confirmation alone, but defensible verification outcomes that can withstand fraud investigations and regulatory scrutiny.

AI plays a critical role by enabling continuous detection and risk scoring across liveness, media integrity, and behavioral signals. However, decisions should remain adaptive. Low-risk interactions can be approved automatically, while higher-risk sessions should trigger step-up checks or assisted review. This approach balances fraud prevention with user experience.

The most resilient verification systems follow a layered design. They combine deepfake and synthetic media detection, presentation attack detection (PAD), protection against injection attacks, and assisted workflows for edge cases. This model aligns with warnings from law-enforcement bodies such as Europol, which has highlighted the increasing use of AI-powered impersonation by organized crime and the growing need for stronger verification standards.

For banks and regulated businesses, the takeaway is clear: trust in video verification comes from system design and procedural rigor, not from any single control or model.